Niels Thykier: debputy v0.1.21

Earlier today, I have just released debputy version 0.1.21

to Debian unstable. In the blog post, I will highlight some

of the new features.

Package boilerplate reduction with automatic relationship substvar

Last month, I started a discussion on rethinking how we do

relationship substvars such as the $ misc:Depends . These

generally ends up being boilerplate runes in the form of

Depends: $ misc:Depends , $ shlibs:Depends where you

as the packager has to remember exactly which runes apply

to your package.

My proposed solution was to automatically apply these substvars

and this feature has now been implemented in debputy. It is

also combined with the feature where essential packages should

use Pre-Depends by default for dpkg-shlibdeps related

dependencies.

I am quite excited about this feature, because I noticed with

libcleri that we are now down to 3-5 fields for defining

a simple library package. Especially since most C library

packages are trivial enough that debputy can auto-derive

them to be Multi-Arch: same.

As an example, the libcleric1 package is down to 3

fields (Package, Architecture, Description)

with Section and Priority being inherited from the

Source stanza. I have submitted a MR to show case the

boilerplate reduction at

https://salsa.debian.org/siridb-team/libcleri/-/merge_requests/3.

The removal of libcleric1 (= $ binary:Version ) in that MR

relies on another existing feature where debputy can auto-derive

a dependency between an arch:any -dev package and the library

package based on the .so symlink for the shared library.

The arch:any restriction comes from the fact that arch:all and

arch:any packages are not built together, so debputy cannot

reliably see across the package boundaries during the build (and

therefore refuses to do so at all).

Packages that have already migrated to debputy can use

debputy migrate-from-dh to detect any unnecessary

relationship substitution variables in case you want to clean

up. The removal of Multi-Arch: same and intra-source

dependencies must be done manually and so only be done so

when you have validated that it is safe and sane to do. I was

willing to do it for the show-case MR, but I am less confident

that would bother with these for existing packages in general.

Note: I summarized the discussion of the automatic relationship

substvar feature earlier this month in

https://lists.debian.org/debian-devel/2024/03/msg00030.html

for those who want more details.

PS: The automatic relationship substvars feature will also

appear in debhelper as a part of compat 14.

Language Server (LSP) and Linting

I have long been frustrated by our poor editor support for Debian packaging files.

To this end, I started working on a Language Server (LSP) feature in debputy

that would cover some of our standard Debian packaging files. This release

includes the first version of said language server, which covers the following

files:

Specifically for emacs, I also learned two things after the upload. First, you

can auto-activate eglot via eglot-ensure. This badly feature interacts with

imenu on debian/changelog for reasons I do not understand (causing a several

second start up delay until something times out), but it works fine for the other

formats. Oddly enough, opening a changelog file and then activating eglot does

not trigger this issue at all. In the next version, editor config for emacs will

auto-activate eglot on all files except debian/changelog.

The second thing is that if you install elpa-markdown-mode, emacs will accept

and process markdown in the hover documentation provided by the language server.

Accordingly, the editor config for emacs will also mention this package from

the next version on.

Finally, on a related note, Jelmer and I have been looking at moving some of this

logic into a new package called debpkg-metadata. The point being to support

easier reuse of linting and LSP related metadata - like pulling a list of known

fields for debian/control or sharing logic between lintian-brush and

debputy.

Most of the effort has been spent on the Deb822 based files such as debian/control, which comes with diagnostics, quickfixes, spellchecking (but only for relevant fields!), and completion suggestions. Since not everyone has a LSP capable editor and because sometimes you just want diagnostics without having to open each file in an editor, there is also a batch version for the diagnostics via debputy lint. Please see debputy(1) for how debputy lint compares with lintian if you are curious about which tool to use at what time. To help you getting started, there is a now debputy lsp editor-config command that can provide you with the relevant editor config glue. At the moment, emacs (via eglot) and vim with vim-youcompleteme are supported. For those that followed the previous blog posts on writing the language server, I would like to point out that the command line for running the language server has changed to debputy lsp server and you no longer have to tell which format it is. I have decided to make the language server a "polyglot" server for now, which I will hopefully not regret... Time will tell. :) Anyhow, to get started, you will want:

- debian/control

- debian/copyright (the machine readable variant)

- debian/changelog (mostly just spelling)

- debian/rules

- debian/debputy.manifest (syntax checks only; use debputy check-manifest for the full validation for now)

$ apt satisfy 'dh-debputy (>= 0.1.21~), python3-pygls'

# Optionally, for spellchecking

$ apt install python3-hunspell hunspell-en-us

# For emacs integration

$ apt install elpa-dpkg-dev-el markdown-mode-el

# For vim integration via vim-youcompleteme

$ apt install vim-youcompleteme

Minimal integration mode for Rules-Requires-Root

One of the original motivators for starting debputy was to be able to get rid of

fakeroot in our build process. While this is possible, debputy currently does

not support most of the complex packaging features such as maintscripts and debconf.

Unfortunately, the kind of packages that need fakeroot for static ownership tend

to also require very complex packaging features.

To bridge this gap, the new version of debputy supports a very minimal integration

with dh via the dh-sequence-zz-debputy-rrr. This integration mode keeps

the vast majority of debhelper sequence in place meaning most dh add-ons

will continue to work with dh-sequence-zz-debputy-rrr. The sequence only

replaces the following commands:

The installations feature of the manifest will be disabled in this integration mode to avoid feature interactions with debhelper tools that expect debian/<pkg> to contain the materialized package. On a related note, the debputy migrate-from-dh command now supports a --migration-target option, so you can choose the desired level of integration without doing code changes. The command will attempt to auto-detect the desired integration from existing package features such as a build-dependency on a relevant dh sequence, so you do not have to remember this new option every time once the migration has started. :)

- dh_fixperms

- dh_gencontrol

- dh_md5sums

- dh_builddeb

This article is cross-posting from grow-your-ideas. This is just an idea.

This article is cross-posting from grow-your-ideas. This is just an idea.

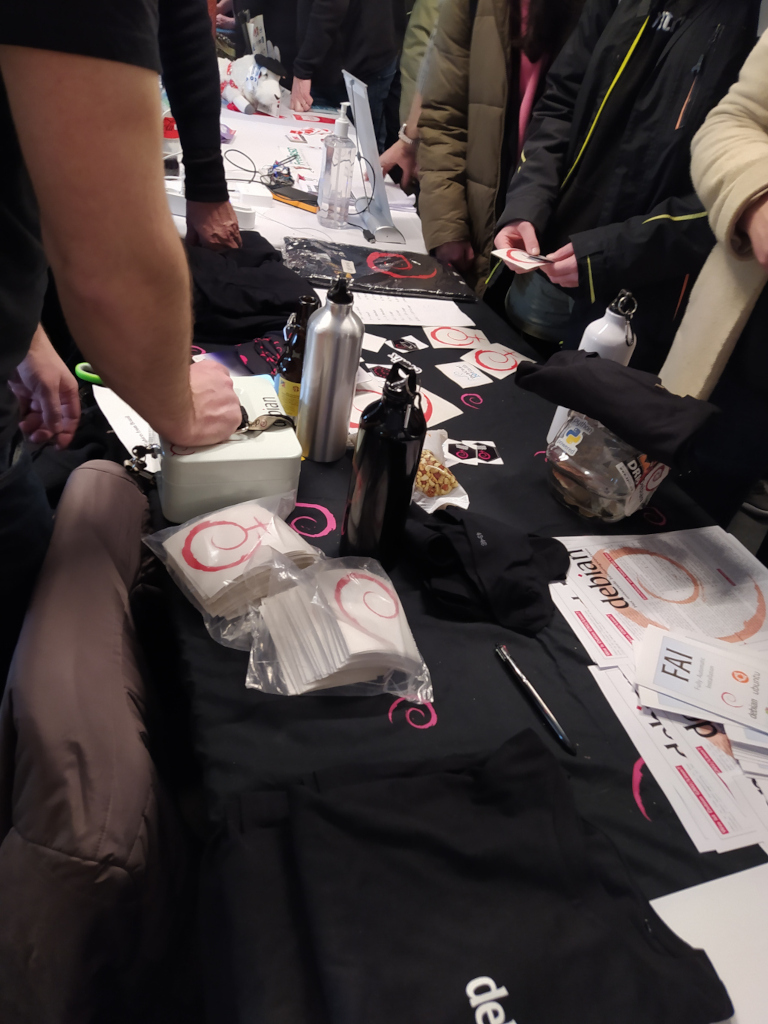

Last week we held our promised miniDebConf in Santa Fe City, Santa Fe province,

Argentina just across the river from Paran , where I have spent almost six

beautiful months I will never forget.

Last week we held our promised miniDebConf in Santa Fe City, Santa Fe province,

Argentina just across the river from Paran , where I have spent almost six

beautiful months I will never forget.

Closing arguments in the trial between various people and

Closing arguments in the trial between various people and

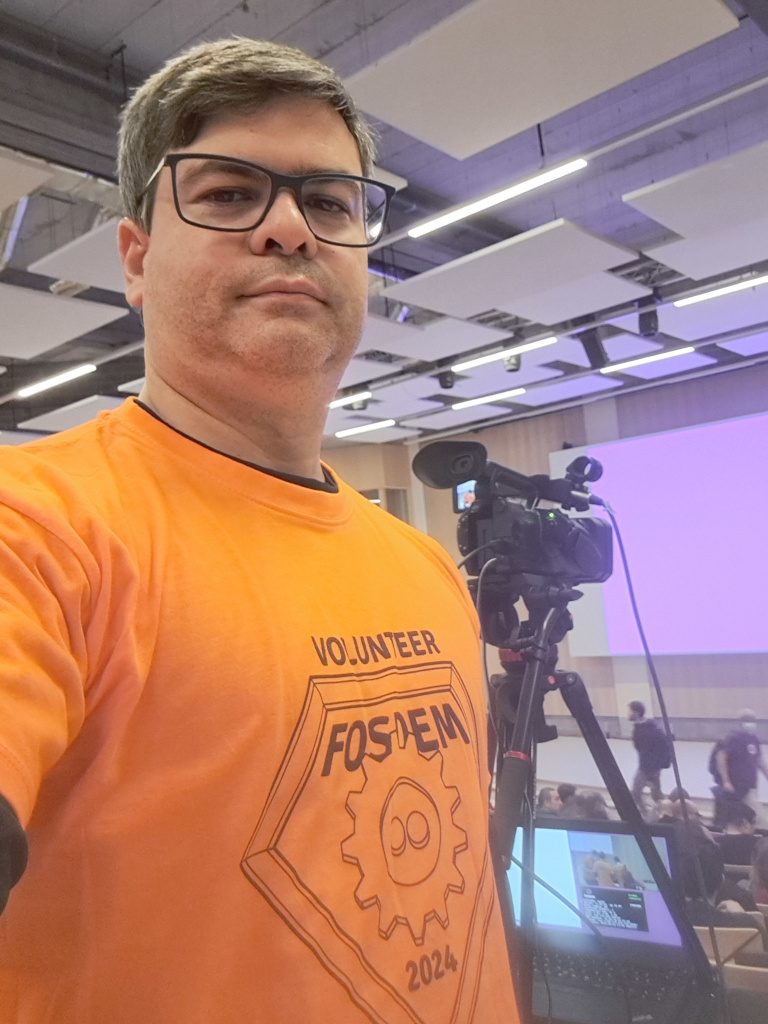

The talks held in the room were these below, and in each of them you can watch the recording video.

The talks held in the room were these below, and in each of them you can watch the recording video.

As there has been an increase in the number of proposals received, I believe that interest in the translations devroom is growing. So I intend to send the devroom proposal to FOSDEM 2025, and if it is accepted, wait for the future Debian Leader to approve helping me with the flight tickets again. We ll see.

As there has been an increase in the number of proposals received, I believe that interest in the translations devroom is growing. So I intend to send the devroom proposal to FOSDEM 2025, and if it is accepted, wait for the future Debian Leader to approve helping me with the flight tickets again. We ll see.

Two months into my

Two months into my

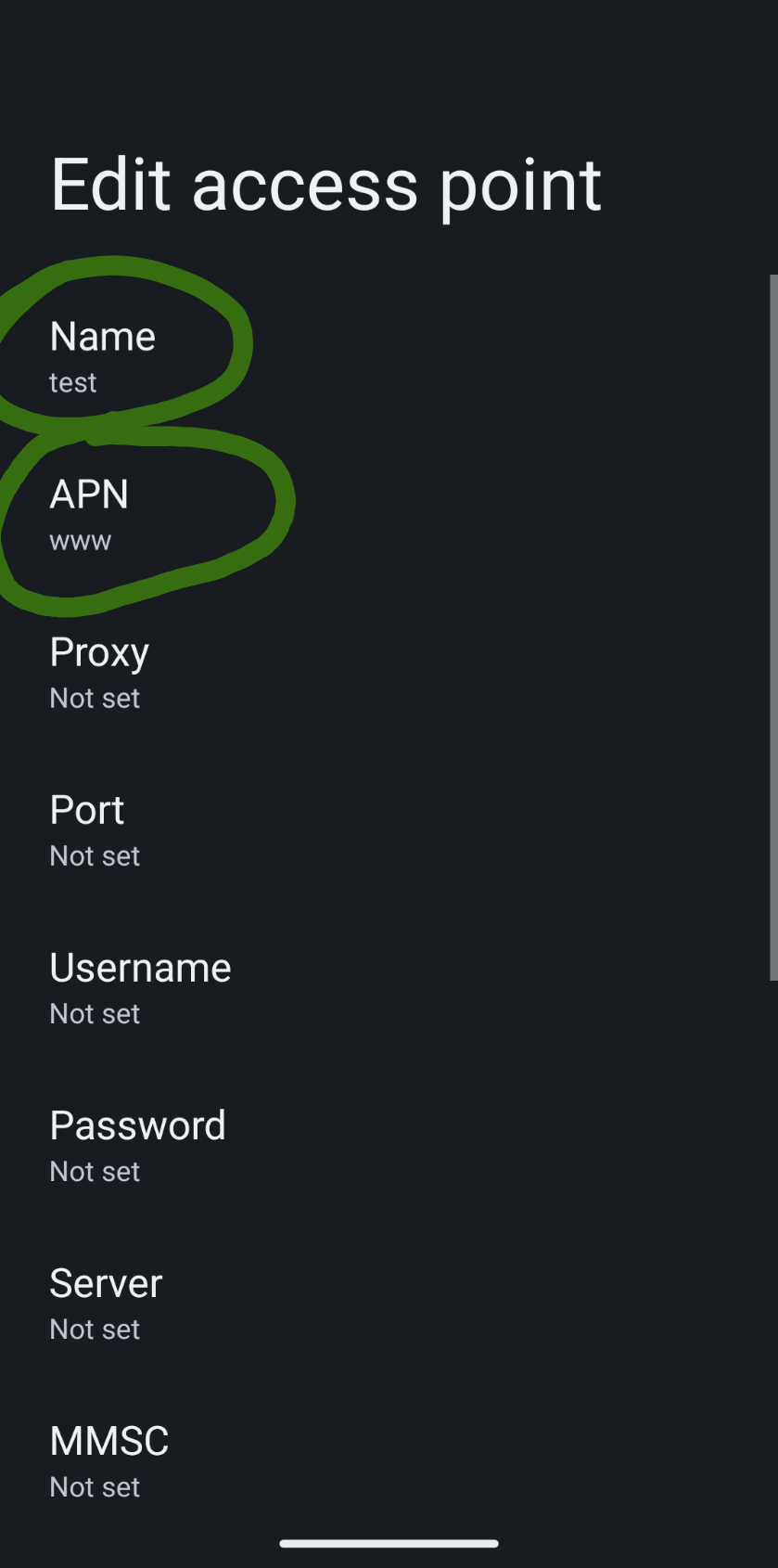

APN settings screenshot. Notice the circled entries.

APN settings screenshot. Notice the circled entries.

In late 2022 I prepared a batch of

In late 2022 I prepared a batch of  I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

After seven years of service as member and secretary on the GHC Steering Committee, I have resigned from that role. So this is a good time to look back and retrace the formation of the GHC proposal process and committee.

In my memory, I helped define and shape the proposal process, optimizing it for effectiveness and throughput, but memory can be misleading, and judging from the paper trail in my email archives, this was indeed mostly Ben Gamari s and Richard Eisenberg s achievement: Already in Summer of 2016, Ben Gamari set up the

After seven years of service as member and secretary on the GHC Steering Committee, I have resigned from that role. So this is a good time to look back and retrace the formation of the GHC proposal process and committee.

In my memory, I helped define and shape the proposal process, optimizing it for effectiveness and throughput, but memory can be misleading, and judging from the paper trail in my email archives, this was indeed mostly Ben Gamari s and Richard Eisenberg s achievement: Already in Summer of 2016, Ben Gamari set up the  For the fifth year in a row, I've asked Soci t de Transport de Montr al,

Montreal's transit agency, for the foot traffic data of Montreal's subway.

By clicking on a subway station, you'll be redirected to a graph of the

station's foot traffic.

For the fifth year in a row, I've asked Soci t de Transport de Montr al,

Montreal's transit agency, for the foot traffic data of Montreal's subway.

By clicking on a subway station, you'll be redirected to a graph of the

station's foot traffic.